evaluate.py

Overview

This script runs the trained net on the test data and evaluates the resulting forecasts. It outputs several graphs that show the performance of the trained model.

The output path can be changed via the "evaluation_path" option in the corresponding config file.
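As a rough illustration of how this option could be read, here is a minimal sketch that assumes the config file is JSON (the format is not stated on this page). The helper name `resolve_evaluation_path` and the file name `config.json` are hypothetical and not part of evaluate.py.

```python
import json
import tempfile
from pathlib import Path


def resolve_evaluation_path(config_file):
    """Return the directory named by "evaluation_path" in a JSON config.

    Hypothetical helper for illustration only; evaluate.py may handle
    its config differently.
    """
    with open(config_file, encoding="utf-8") as f:
        config = json.load(f)
    out_dir = Path(config["evaluation_path"])
    # Create the directory so that plots can be written there later.
    out_dir.mkdir(parents=True, exist_ok=True)
    return out_dir


# Usage with a throwaway config; all paths here are placeholders.
with tempfile.TemporaryDirectory() as tmp:
    cfg = Path(tmp) / "config.json"
    cfg.write_text(json.dumps({"evaluation_path": str(Path(tmp) / "eval_out")}))
    out_dir = resolve_evaluation_path(cfg)
    print(out_dir.name)  # eval_out
```

Changing the value of "evaluation_path" in the config is then enough to redirect where the outputs land, without touching the script itself.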

Inputs

- A config file

- Test data, all in one .csv file. This file can also include the validation and training data (see test_split in the config description). The path is defined in the config.

- A trained model. Its path is also defined in the config.
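To illustrate how a single .csv file can hold training, validation, and test data at once, the sketch below cuts off the trailing part of the rows as the test portion. It assumes that test_split is a fraction marking where the test data begins and that the rows are in chronological order. Both are assumptions, so check the config description for the actual semantics. The helper `select_test_rows` and the column names are made up for this example.

```python
import csv
import io


def select_test_rows(rows, test_split):
    """Return the trailing part of `rows`, starting at fraction `test_split`.

    Assumption: test_split is a fraction in [0, 1) and `rows` is ordered
    chronologically, so everything after the split point is test data.
    """
    if not 0 <= test_split < 1:
        raise ValueError("test_split must be in [0, 1)")
    start = int(len(rows) * test_split)
    return rows[start:]


# Tiny in-memory stand-in for the .csv file (hypothetical columns).
raw = "time,load\n0,10\n1,12\n2,11\n3,13\n"
rows = list(csv.DictReader(io.StringIO(raw)))
test_rows = select_test_rows(rows, test_split=0.5)
print([r["time"] for r in test_rows])  # ['2', '3']
```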

Outputs

- Benchmark and error plots

Example Output Files

The output consists of the actual load prediction graph split up into multiple .png images and a metrics plot containing information about the model’s performance.

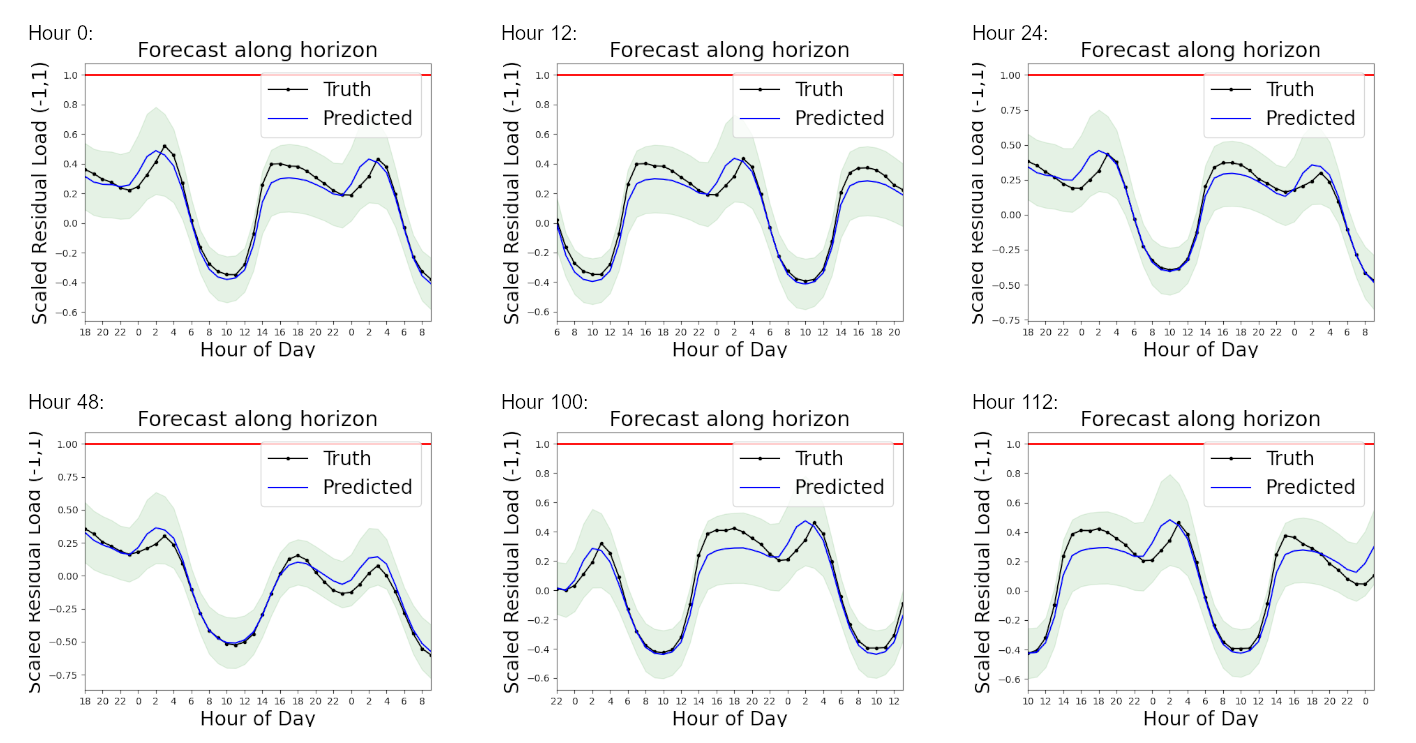

Plots of the predicted load at different hours

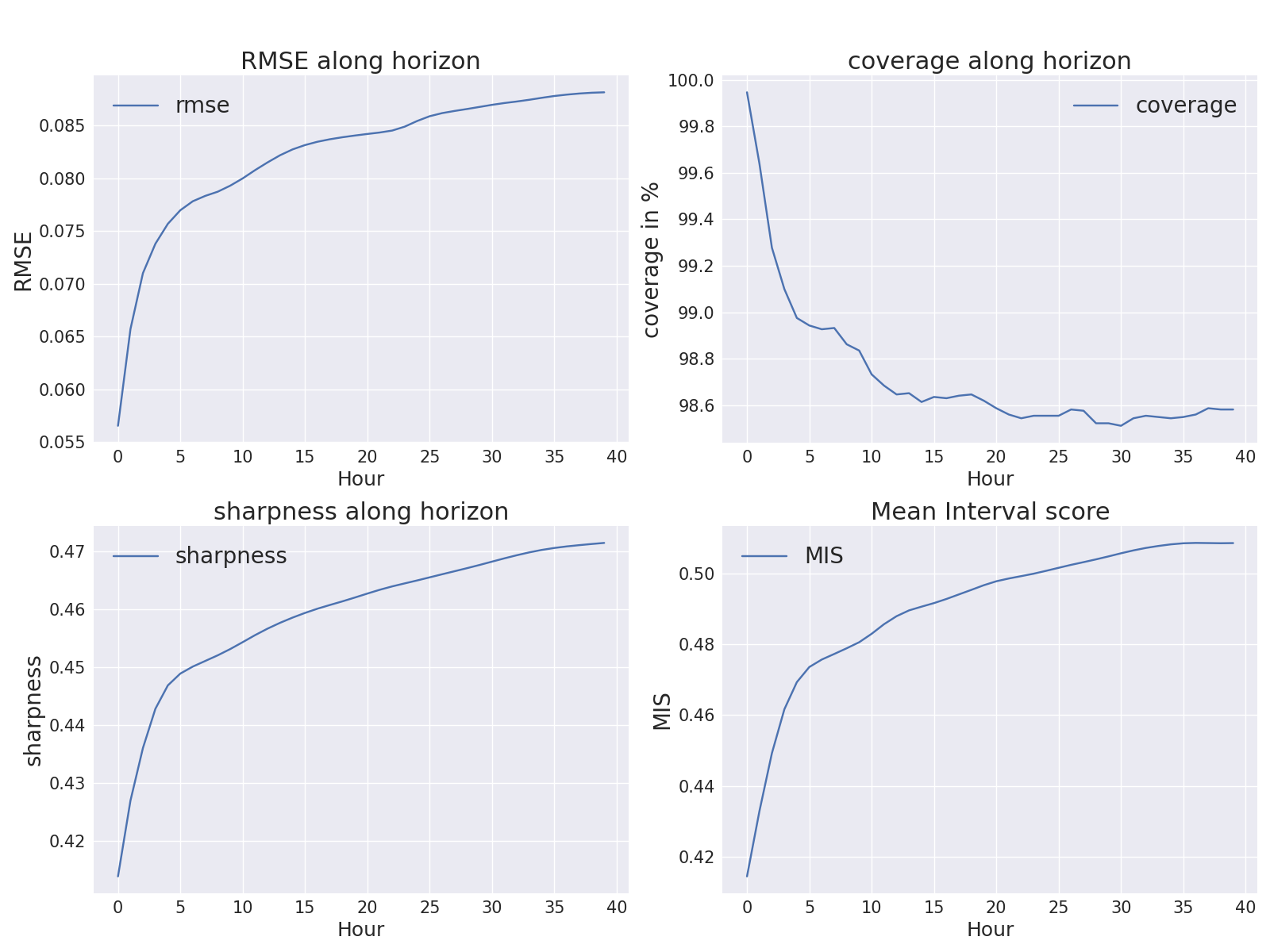

Metrics plot with results of evaluation

Summarized Description of Performance Metrics

For more details on the deterministic and probabilistic metrics used to judge whether a forecast is “good” or “bad”, please take a look at the Tutorial Notebook.
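For orientation, the sketch below shows two common deterministic metrics (mean absolute error and root mean squared error) and one simple probabilistic check (the share of observations that fall inside a prediction interval). These are generic textbook examples; whether evaluate.py reports exactly these metrics is not stated on this page, and all function names and numbers are illustrative.

```python
import math


def mae(actual, predicted):
    """Mean absolute error between observed and forecast values."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean squared error; penalizes large errors more than MAE."""
    return math.sqrt(
        sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)
    )


def interval_coverage(actual, lower, upper):
    """Fraction of observations inside the interval [lower, upper]."""
    hits = sum(lo <= a <= up for a, lo, up in zip(actual, lower, upper))
    return hits / len(actual)


# Made-up load values, point forecasts, and interval bounds.
actual = [10.0, 12.0, 14.0, 16.0]
predicted = [11.0, 12.0, 13.0, 18.0]
lower = [9.0, 11.0, 14.5, 15.0]
upper = [12.0, 13.0, 16.0, 19.0]

print(mae(actual, predicted))                   # 1.0
print(rmse(actual, predicted))                  # square root of 1.5
print(interval_coverage(actual, lower, upper))  # 0.75
```

Deterministic metrics score only the point forecast. The coverage check also asks whether the predicted uncertainty band is plausible, which is why the two kinds are usually reported together.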

Reference Documentation

If you need more details, please take a look at the reference for this script.